Augmented Reality App Development for Leef: Virtual Fitting Room

How it started

Leef is a US company that has been producing USB flash drives, adapters, microSD readers, and other digital accessories for smartphones for about 10 years. Recently, it opened a new division selling glasses and watches. To promote the new products, the company decided to create a marketing mobile app.

The Leef team had read our R&D articles about building a face recognition solution, detecting road signs and license plates, and recognizing receipts. Impressed with our expertise, they turned to us for augmented reality app development. The app lets users try on virtual watches right on their own hand.

Solution

We started the project in the fall of 2017, planning to begin with an iOS demo of the augmented reality app and then move on to the Android version.

Our R&D engineers studied various open-source libraries that could be used for an augmented reality application. None of the ready-made libraries suited the task, so they proposed building a custom solution.

I couldn’t find the sports car of my dreams so I built it myself. — Ferdinand Porsche

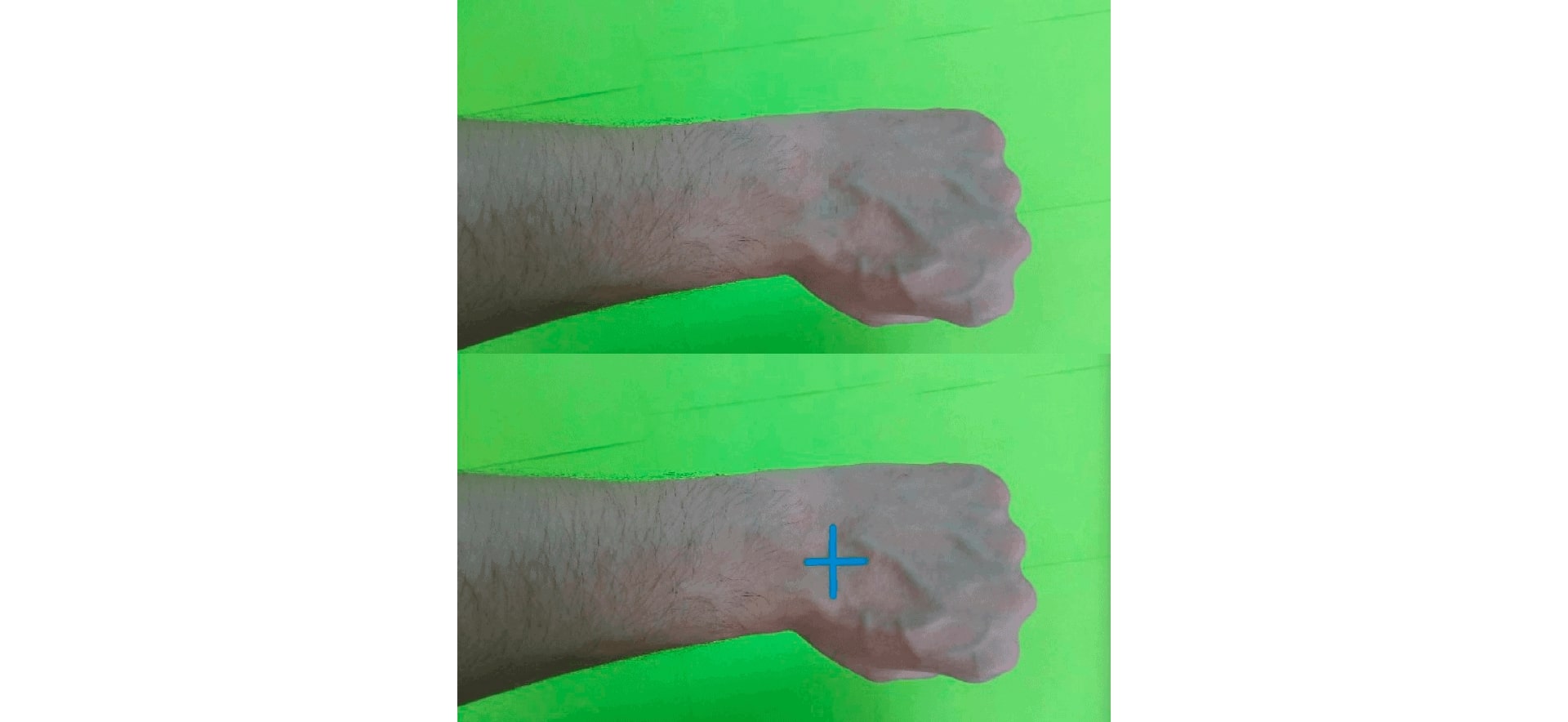

To train a convolutional neural network, we prepared our own dataset tailored to the project's requirements. One of our developers even volunteered as a research subject and lent his hand for building the dataset. We drew a cross on his wrist to mark where the watch should sit. We photographed the hand from different angles and replaced the background to augment the dataset, producing as many variations as possible, since a neural network needs a lot of data to train.
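Background replacement can be sketched as alpha compositing: keep the hand pixels and swap in a new background everywhere else. This is a minimal NumPy sketch, not the project's code; it assumes a per-photo hand mask is already available (the source does not say how the hand was separated from the original background), and `replace_background` and `augment` are hypothetical names.

```python
import numpy as np


def replace_background(photo: np.ndarray, mask: np.ndarray,
                       background: np.ndarray) -> np.ndarray:
    """Paste the hand pixels of `photo` (where mask is 1) onto `background`.

    photo, background: H x W x 3 uint8 images of the same size.
    mask: H x W array with values in [0, 1]; 1 marks the hand (assumed given).
    """
    if photo.shape != background.shape:
        raise ValueError("photo and background must have the same shape")
    alpha = mask.astype(np.float32)[..., None]  # H x W x 1, broadcasts over RGB
    blended = alpha * photo + (1.0 - alpha) * background
    return blended.round().astype(np.uint8)


def augment(photo, mask, backgrounds):
    """Yield one composite of the same hand photo per background image."""
    for bg in backgrounds:
        yield replace_background(photo, mask, bg)
```

With N background images, each source photo yields N training samples, which is one way a dataset can grow quickly from a limited number of shots.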

We gradually grew the dataset to 60,000 photos. Along the way, we changed the color of the cross from green to blue: experiments showed that a bright blue mark is easier to distinguish from the background.
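One simple way to locate a strongly colored mark is a per-pixel color threshold followed by a centroid. The sketch below assumes RGB channel order (note that OpenCV loads images as BGR) and uses illustrative thresholds, not the values used in the project; `blue_mask` and `find_blue_mark` are hypothetical names.

```python
import numpy as np


def blue_mask(image: np.ndarray, min_blue: int = 150, margin: int = 60) -> np.ndarray:
    """Boolean mask of 'bright blue' pixels in an H x W x 3 RGB uint8 image.

    A pixel counts as blue when its blue channel is at least `min_blue`
    and exceeds both red and green by at least `margin` (illustrative values).
    """
    img = image.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    return (b >= min_blue) & (b - r >= margin) & (b - g >= margin)


def find_blue_mark(image: np.ndarray):
    """Return the (row, col) centroid of blue pixels, or None if there are none."""
    rows, cols = np.nonzero(blue_mask(image))
    if rows.size == 0:
        return None
    return float(rows.mean()), float(cols.mean())
```

Comparing blue against the other channels, rather than thresholding blue alone, keeps bright white or gray areas from being detected as the mark.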

Then we developed an algorithm that erases the cross from the hand. We needed the mark to train the neural network, but users don't need to see it in the app.
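The source does not describe the erasing algorithm. A standard approach is inpainting, which fills the marked pixels from their surroundings. OpenCV offers `cv2.inpaint(src, inpaintMask, inpaintRadius, flags)` with `cv2.INPAINT_TELEA` or `cv2.INPAINT_NS` for this. The sketch below is a simpler NumPy stand-in, a diffusion fill that repeatedly replaces masked pixels with the average of their four neighbors. It assumes the mask comes from a detector such as a color threshold; `diffuse_fill` is a hypothetical name.

```python
import numpy as np


def diffuse_fill(image: np.ndarray, mask: np.ndarray, iterations: int = 200) -> np.ndarray:
    """Fill pixels where `mask` is True by repeated 4-neighbor averaging.

    image: H x W x C uint8; mask: H x W bool. Unmasked pixels are never changed.
    """
    if mask.all():
        raise ValueError("mask covers the whole image; nothing to fill from")
    out = image.astype(np.float32)
    # Start the masked region at the mean color of the unmasked pixels.
    out[mask] = out[~mask].mean(axis=0)
    for _ in range(iterations):
        padded = np.pad(out, ((1, 1), (1, 1), (0, 0)), mode="edge")
        neighbors = (padded[:-2, 1:-1] + padded[2:, 1:-1] +   # up + down
                     padded[1:-1, :-2] + padded[1:-1, 2:]) / 4.0  # left + right
        out[mask] = neighbors[mask]  # only masked pixels are updated
    return np.clip(out.round(), 0, 255).astype(np.uint8)
```

Diffusion fills work well for thin marks on smooth regions like skin; `cv2.inpaint` is the more robust choice when OpenCV is already in the stack.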

To build the virtual fitting room, we ran several tests. We developed an AR prototype in Python on Linux to check how the neural networks behaved on a desktop before porting it to iOS. We used three neural networks: the first found the center of the wrist, the second estimated the wrist size, and the third recognized the position of the hand so that the virtual watch would move exactly as the hand moves.
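The source does not detail how the three outputs reach the renderer. As an illustration only, assume the first network returns the wrist center in pixels, the second the wrist width in pixels, and the third an in-plane rotation angle of the hand (the real app placed a 3D model, so reducing the pose to one angle is a simplification). Under those assumptions, the outputs combine into a 2D similarity transform for a watch image of known width. `WristEstimate`, `watch_transform`, and `apply_transform` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class WristEstimate:
    center: Tuple[float, float]  # (x, y) from network 1 (assumed output)
    width_px: float              # wrist width from network 2 (assumed output)
    angle_rad: float             # hand rotation from network 3 (simplified pose)


def watch_transform(est: WristEstimate, watch_width_px: float) -> List[List[float]]:
    """2x3 affine matrix mapping watch-image coords (origin at the watch center)
    to camera coords: scale to the wrist width, rotate with the hand,
    then translate to the wrist center."""
    s = est.width_px / watch_width_px
    c, si = math.cos(est.angle_rad) * s, math.sin(est.angle_rad) * s
    cx, cy = est.center
    return [[c, -si, cx],
            [si, c, cy]]


def apply_transform(m: List[List[float]], point: Tuple[float, float]) -> Tuple[float, float]:
    """Map one watch-image point into the camera frame."""
    x, y = point
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])
```

Recomputing this transform every frame from fresh network outputs is what makes the watch follow the hand.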

Once all three networks were trained, we imported a 3D watch model into the iOS application and tried to use Apple's ARKit, but this turned out to be a mistake: ARKit is supported only on the iPhone 6S and newer models. In the end, we chose SceneKit, which also works on older iPhones.

The demo took three months to develop, including updates. We expanded the dataset and converted the 3D watch models into a new graphics format. We also began porting the neural network algorithm to Android.

Of course, our iOS experience helped a lot, but Android brought some complications. While iOS handled three neural networks easily, performance on Android was poor. The Android app was slow to process two images, a main one and an additional one. As a result, the AR experience on Android turned into a slideshow of watches.

We tried several options and found a way out: we rebuilt the Android app architecture and trained a new neural network that performs two tasks simultaneously. The result was impressive: the Android app's speed doubled, and the additional image was no longer needed.
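The source does not say which two tasks were merged. A common way to make one network do two jobs is hard parameter sharing: a shared feature extractor followed by two task-specific heads, so the expensive part runs once per image instead of once per task. The forward-pass sketch below assumes, hypothetically, a wrist-center head and a wrist-size head. A single dense layer stands in for the convolutional backbone, sizes are arbitrary, and the weights are random, untrained placeholders.

```python
import numpy as np


class TwoHeadNet:
    """Shared backbone + two task heads (hard parameter sharing), forward pass only."""

    def __init__(self, in_dim: int, hidden: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w_shared = rng.normal(0.0, 0.1, (in_dim, hidden))
        self.b_shared = np.zeros(hidden)
        self.w_center = rng.normal(0.0, 0.1, (hidden, 2))  # head 1: wrist (x, y), assumed
        self.w_size = rng.normal(0.0, 0.1, (hidden, 1))    # head 2: wrist size, assumed
        self.backbone_calls = 0  # counts how often the shared part runs

    def features(self, x: np.ndarray) -> np.ndarray:
        self.backbone_calls += 1
        return np.maximum(x @ self.w_shared + self.b_shared, 0.0)  # ReLU

    def __call__(self, x: np.ndarray):
        h = self.features(x)  # computed once, reused by both heads
        return h @ self.w_center, h @ self.w_size
```

Such a network is usually trained on a weighted sum of the two task losses, for example `loss = loss_center + lam * loss_size`, where `lam` balances the tasks.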

For now, the iOS and Android demos let users try on only one watch model, but the next step is to let users choose any watch from the catalog and see how it looks on their hand.

We used Objective-C, TensorFlow, Java, SceneKit, and Python (NumPy, OpenCV).

Outcome

Developing an augmented reality virtual fitting room was an unusual and ambitious project. We ran many experiments with different convolutional neural network heuristics, improved our skills in 3D technologies, and learned to port neural networks to mobile platforms. We are proud of ourselves and the result: a demo application that runs at up to 30 frames per second instead of the planned 5.

Stack

- Objective-C
- Java
- Android
- iOS

Related projects

-

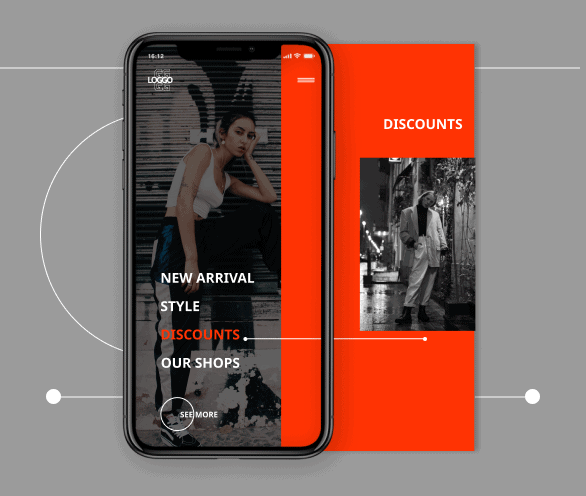

Norwegian Fashion Retailer

Learn more -

Beyond the Rack

Learn more -

Azoft WebChat

Learn more