Developing a Face Recognition System Using Convolutional Neural Network

Founder & CEO at Azoft

Artificial neural networks have become an integral part of our lives and are actively being used in many areas where traditional algorithmic solutions don’t work well or don’t work at all. Neural networks are commonly used for text recognition, automated email spam detection, stock market prediction, contextual online advertising, and more.

One of the most significant and promising areas in which this technology is rapidly evolving is security. For instance, neural networks can be used to monitor banking transactions for suspicious activity and to analyze footage in video surveillance (CCTV) systems. The Azoft R&D team has experience with such technology: we are currently developing a neural network for face recognition software. According to our client’s requirements, the facial recognition system should be sufficiently invariant to lighting conditions, face position in space, and changing facial expressions.

The system works with a security camera and a relatively small number (dozens) of people, and it should be able to consistently distinguish strangers from the people it has been trained to recognize. Our work on the solution consisted of three main phases: data preparation, neural network training, and recognition.

During the preparation phase, we recorded 300 original images of each person that the system should recognize as “known”. Images with different facial expressions and head positions were transformed to a normalized view that minimizes differences in position and rotation. Based on this data, we generated a larger set of samples using semi-randomized variations of distortion and color filters. As a result, we had 1500-2000 samples per person for neural network training. Whenever the system receives a face sample for recognition, it transforms that image to match the uniform appearance of the training samples.
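
The sample generation step can be sketched roughly as follows. This is a minimal illustration, not our production code: the function names, the parameter ranges, and the choice of a single affine transform per sample are assumptions made for the example, and color filters are left out.

```python
import math
import random


def random_distortion(rng, max_angle_deg=5.0, max_stretch=0.05, max_shift_px=3.0):
    """Draw one semi-random set of distortion parameters (ranges are illustrative)."""
    return {
        "angle_deg": rng.uniform(-max_angle_deg, max_angle_deg),
        "scale_x": 1.0 + rng.uniform(-max_stretch, max_stretch),
        "scale_y": 1.0 + rng.uniform(-max_stretch, max_stretch),
        "shift_x": rng.uniform(-max_shift_px, max_shift_px),
        "shift_y": rng.uniform(-max_shift_px, max_shift_px),
        "mirror": rng.random() < 0.5,
    }


def affine_matrix(params, width, height):
    """Build a 2x3 affine matrix that distorts the image around its centre."""
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    a = math.radians(params["angle_deg"])
    # A negative horizontal scale mirrors the image left to right.
    sx = -params["scale_x"] if params["mirror"] else params["scale_x"]
    sy = params["scale_y"]
    m00, m01 = math.cos(a) * sx, -math.sin(a) * sy
    m10, m11 = math.sin(a) * sx, math.cos(a) * sy
    # Translation chosen so that the centre maps to centre + shift.
    tx = cx - (m00 * cx + m01 * cy) + params["shift_x"]
    ty = cy - (m10 * cx + m11 * cy) + params["shift_y"]
    return [[m00, m01, tx], [m10, m11, ty]]


def apply_affine(matrix, point):
    """Map one (x, y) point through a 2x3 affine matrix."""
    x, y = point
    return (
        matrix[0][0] * x + matrix[0][1] * y + matrix[0][2],
        matrix[1][0] * x + matrix[1][1] * y + matrix[1][2],
    )


def augmentation_plan(n_originals, variants_per_image, seed=0):
    """List (original index, distortion parameters) pairs, reproducible via the seed."""
    rng = random.Random(seed)
    return [
        (index, random_distortion(rng))
        for index in range(n_originals)
        for _ in range(variants_per_image)
    ]


plan = augmentation_plan(n_originals=300, variants_per_image=5)
print(len(plan))  # 1500
```

With 300 originals, five variants per image give 1500 samples, the low end of the range mentioned above. The resulting matrix could then be handed to any image library that warps images with a 2x3 affine transform.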

We tried several ways of normalizing the available data. Our system detects facial features (eyes, nose, chin, cheeks) using Haar cascade classifiers. By measuring the angle of the line between the eyes, we can compensate for head rotation and select the region of interest. The head inclination measured in the previous frame lets us keep hold of the face region while the subject’s head tilts continuously, which minimizes the number of times the camera image has to be pre-rotated and sent to the classifier.
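
To make the rotation compensation concrete, here is a small sketch of the geometry. It assumes the eye centres have already been found by the detector and are given as (x, y) pixel coordinates; the function names are hypothetical and the example rotates only the two eye points rather than a whole image.

```python
import math


def eye_angle_degrees(left_eye, right_eye):
    """Angle of the line through both eye centres, in degrees.

    Image coordinates are assumed, with y growing downwards, so a positive
    angle means the eye on the right of the image sits lower.
    """
    dx = right_eye[0] - left_eye[0]
    dy = right_eye[1] - left_eye[1]
    return math.degrees(math.atan2(dy, dx))


def rotate_point(point, center, angle_degrees):
    """Rotate a point around a centre using the standard 2D rotation matrix."""
    a = math.radians(angle_degrees)
    x = point[0] - center[0]
    y = point[1] - center[1]
    return (
        center[0] + x * math.cos(a) - y * math.sin(a),
        center[1] + x * math.sin(a) + y * math.cos(a),
    )


def level_eyes(left_eye, right_eye):
    """Return the measured tilt and the eye positions after undoing it."""
    angle = eye_angle_degrees(left_eye, right_eye)
    center = (
        (left_eye[0] + right_eye[0]) / 2.0,
        (left_eye[1] + right_eye[1]) / 2.0,
    )
    # Rotating by the negative angle brings the eye line back to horizontal.
    return (
        angle,
        rotate_point(left_eye, center, -angle),
        rotate_point(right_eye, center, -angle),
    )


tilt, new_left, new_right = level_eyes((100.0, 120.0), (160.0, 140.0))
```

After the call, `new_left` and `new_right` share the same y coordinate and keep their original distance from each other. The same angle and centre could be used to rotate the full frame before cropping the face region.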

After experimenting with color filters, we settled on a method that normalizes brightness and contrast, computes the average histogram of our dataset, and applies it to each image individually (including the images submitted for recognition). Distortions such as small rotations, stretching, displacement, and mirror reflection can also be applied to the images. This approach, combined with normalization, significantly reduces the system’s sensitivity to changes in face position.
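
One common way to apply an average histogram to an image is histogram matching, sketched below. Whether this matches our internal filter exactly is an assumption of the example, as are the function names. Images are represented as flat lists of 8-bit grayscale values to keep the sketch dependency-free.

```python
def histogram(pixels, bins=256):
    """Normalized histogram of 8-bit pixel values (fractions sum to 1)."""
    counts = [0] * bins
    for value in pixels:
        counts[value] += 1
    total = len(pixels)
    return [count / total for count in counts]


def average_histogram(images, bins=256):
    """Bin-wise mean of the normalized histograms of all images."""
    hists = [histogram(image, bins) for image in images]
    return [sum(h[i] for h in hists) / len(hists) for i in range(bins)]


def cumulative(hist):
    """Cumulative distribution of a normalized histogram."""
    result, running = [], 0.0
    for fraction in hist:
        running += fraction
        result.append(running)
    return result


def match_to_histogram(pixels, target_hist):
    """Remap pixel values so their distribution follows target_hist."""
    source_cdf = cumulative(histogram(pixels))
    target_cdf = cumulative(target_hist)
    lookup = []
    level = 0
    for value in range(256):
        # Smallest target level whose cumulative share reaches the source's.
        while level < 255 and target_cdf[level] < source_cdf[value] - 1e-12:
            level += 1
        lookup.append(level)
    return [lookup[value] for value in pixels]


dark = [10, 10, 20, 20]
bright = [200, 200, 220, 220]
target = average_histogram([dark, bright])
print(match_to_histogram(dark, target))    # [20, 20, 220, 220]
print(match_to_histogram(bright, target))  # [20, 20, 220, 220]
```

In this toy case a dark and a bright image end up with identical pixel values, which is the effect we want from the filter: the network sees the same tonal distribution regardless of exposure.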

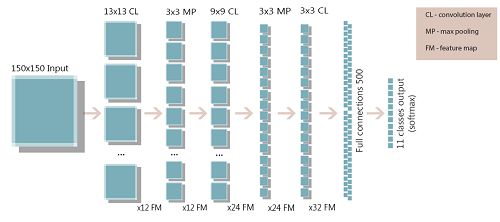

For the recognition itself, we used our own in-house implementation of a convolutional neural network, one of the most relevant options available today, which has already proven itself in image classification and symbol recognition.

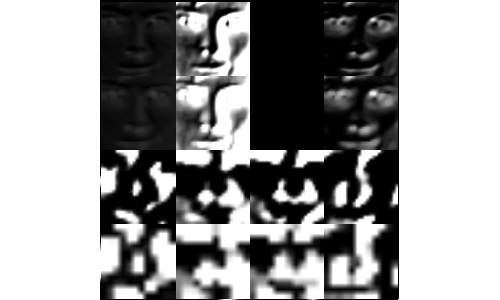

There are several advantages to using convolutional neural networks for our project. First, this type of network is invariant to small movements of the input. Second, during training the network extracts a set of facial characteristics (feature maps) for each class while preserving their relative position in space. We can also change the architecture of the convolutional network by controlling the number of layers, their size, and the number of feature maps in each layer.
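
To show how these architecture parameters interact, the sketch below computes the size and number of feature maps after each layer. The layer list is a hypothetical LeNet-style configuration chosen for illustration, not the architecture of our network, and it assumes square inputs, unpadded stride-1 convolutions, and non-overlapping pooling.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Side length of a feature map after a square convolution."""
    return (input_size + 2 * padding - kernel_size) // stride + 1


def describe_network(input_size, layers):
    """Trace feature map shapes through a list of layers.

    Each layer is ("conv", number_of_maps, kernel_size) or ("pool", window).
    Returns a list of (layer_type, number_of_maps, height, width) tuples.
    """
    size, maps, shapes = input_size, 1, []
    for layer in layers:
        if layer[0] == "conv":
            _, maps, kernel = layer
            size = conv_output_size(size, kernel)
        elif layer[0] == "pool":
            _, window = layer
            size = size // window  # pooling keeps the number of maps
        else:
            raise ValueError(f"unknown layer type: {layer[0]}")
        shapes.append((layer[0], maps, size, size))
    return shapes


# Hypothetical configuration for a 32x32 grayscale face crop.
layers = [("conv", 6, 5), ("pool", 2), ("conv", 16, 5), ("pool", 2)]
for shape in describe_network(32, layers):
    print(shape)
# ('conv', 6, 28, 28)
# ('pool', 6, 14, 14)
# ('conv', 16, 10, 10)
# ('pool', 16, 5, 5)
```

Each pooling step halves the spatial resolution, which is part of what makes the network tolerant of small shifts, while the growing number of maps lets deeper layers represent more facial characteristics.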

To observe and debug the influence of these parameters on the network’s performance, we output the images that the network generates during recognition. This approach can be extended with a deconvolutional neural network, a technique that makes it possible to visualize how different parts of the input image contribute to the feature maps.

Our current prototype is trained to recognize 10 company employees and to classify all other faces as “unknown” to the system.
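
With one “unknown” class plus 10 employees, the final decision can be pictured as picking the most probable of 11 outputs. The sketch below is an illustration under assumptions: the label names are placeholders, and the extra confidence threshold that falls back to “unknown” is an addition for the example, not a described part of our prototype.

```python
import math

# Class 0 is the trained "unknown" class; the employee names are placeholders.
LABELS = ["unknown"] + [f"employee_{i}" for i in range(1, 11)]


def softmax(scores):
    """Turn raw network outputs into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(score - peak) for score in scores]  # shifted for stability
    total = sum(exps)
    return [value / total for value in exps]


def classify(scores, min_confidence=0.6):
    """Return (label, probability) for one face sample.

    min_confidence is a hypothetical safeguard: weak winners are
    reported as "unknown" instead of being assigned to an employee.
    """
    probabilities = softmax(scores)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    if probabilities[best] < min_confidence:
        return "unknown", probabilities[best]
    return LABELS[best], probabilities[best]


confident = [0.0] * 11
confident[3] = 8.0
print(classify(confident)[0])   # employee_3
print(classify([0.0] * 11)[0])  # unknown
```

The threshold trades missed recognitions for fewer false matches, so it would need tuning against real camera footage.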

As a training set of “unknown” faces, we used the Labeled Faces in the Wild database, as well as 6 sets of employee faces. Testing was carried out using D-Link DCS 2230 cameras at distances of 1 to 4 meters.

According to testing results, our prototype consistently recognizes “known” faces and successfully distinguishes “strangers” in good lighting conditions.

However, the neural network is still sensitive to changes in light direction and to low-light conditions in general. For more advanced light compensation, we are planning to generate a 3D face model from a smaller set of photos and the positions of the main facial features. The resulting training set would include renders of this model in a wide variety of poses and lighting conditions (surface and local shadows) for each subject, without the need to take thousands of photos.

The Azoft team also plans to improve face localization (right now we are using standard OpenCV cascades) and to test with a different camera whose recording quality and speed do not adversely affect facial feature detection and recognition stability.

Another obstacle we need to overcome is the long time it takes to train a convolutional neural network. Even for modest-sized datasets, training may take a whole day. To address this, we plan to move network training to the GPU. This will significantly reduce the time it takes to obtain and analyze the results of changes in architecture and data, so we’ll be able to conduct more experiments to determine the optimal data format and network architecture.
